Data-driven Head Motion Generation through Natural Gaze-Head Coordination

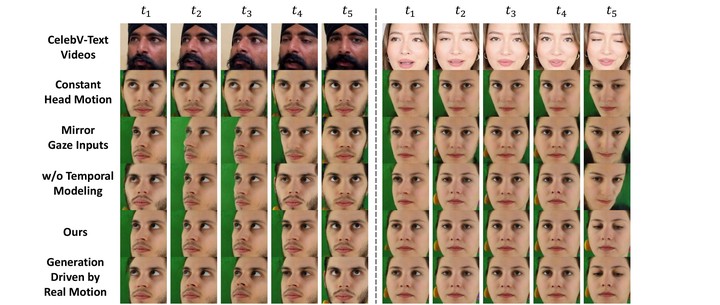

Qualitative comparison of facial videos showing five consecutive frames.

Qualitative comparison of facial videos showing five consecutive frames.

Abstract

We present the first data-driven approach to model temporal gaze-head coordination from large-scale in-the-wild facial videos. To obtain training data for generalizable learning, we propose an automatic pipeline that extracts natural yet diverse gaze and head motions with off-the-shelf appearance-based gaze estimators. To capture the probabilistic correlation and temporal dynamics of gaze-head coordination, we build our model on a generative conditional Variational Autoencoder for plausible yet diverse gaze-conditioned head motion generations. We further apply our framework to gaze-controlled facial video generation, where we enable video generation with natural and realistic head motion correlated to the input gaze - an aspect that has not been emphasized before. Human evaluation and quantitative comparisons demonstrate our method’s effectiveness and validate our design choices, with evaluators showing statistically significant preference for our approach over baseline methods.